Neural Network: Applications, Criticism, and the Way Forward

Basics of Neural Network

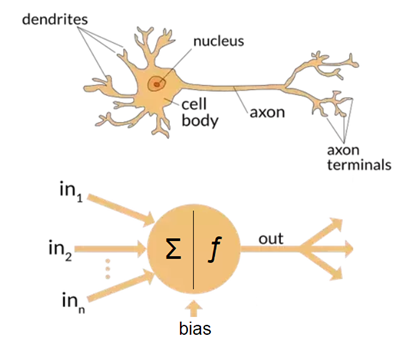

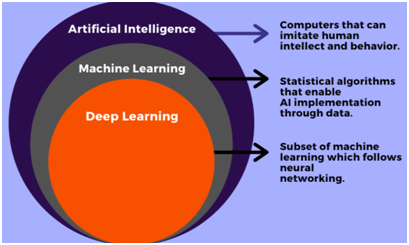

A neural network is a network or circuit of neurons. It is either a biological neural network, made up of real biological neurons, or an artificial neural network, composed of artificial neurons or nodes and used for solving artificial intelligence (AI) problems. Artificial neural networks in the modern sense are computing systems vaguely inspired by the biological neural networks that constitute animal brains. Such a system is based on a collection of connected units or nodes called artificial neurons, which loosely mimic the neurons in a biological brain. Each connection, like a synapse in a biological brain, can transmit a signal to other neurons. A “neuron” in a neural network is a mathematical function that collects and classifies information according to a specific architecture.

An artificial neuron receives a signal, processes it, and then signals the neurons connected to it. The "signal" at a connection (connections are called edges) is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. Neurons and edges typically have a weight that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. A positive weight reflects an excitatory connection, while a negative weight reflects an inhibitory connection.

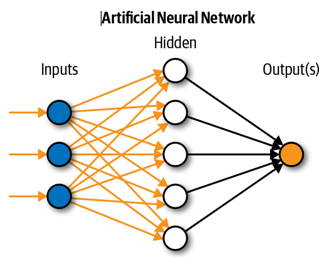

Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typically, neurons are aggregated into layers. Different layers may perform different transformations on their inputs. Signals travel from the first layer (the input layer) to the last layer (the output layer), possibly after traversing the layers multiple times. All inputs are modified by a weight and summed. This activity is referred to as a linear combination. Finally, an activation function controls the amplitude of the output. The output usually ranges between 0 and 1, but it could also range between −1 and 1.
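The weighted sum and activation described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the names `sigmoid` and `neuron_output`, the optional `bias` term, and the example weights are assumptions chosen for the example.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias=0.0, activation=sigmoid):
    """Weight each input, sum the results (a linear combination),
    then pass the sum through an activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Hypothetical values: one excitatory (positive) and one inhibitory (negative) weight.
inputs = [1.0, 1.0]
weights = [2.0, -1.0]

out_sigmoid = neuron_output(inputs, weights)                     # lies in (0, 1)
out_tanh = neuron_output(inputs, weights, activation=math.tanh)  # lies in (-1, 1)
```

Swapping the activation function is what changes the output range: the logistic function keeps the output between 0 and 1, while the hyperbolic tangent keeps it between −1 and 1.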

Artificial neural networks may be used for predictive modelling, adaptive control and other applications where they can be trained on a dataset. Self-learning could result from experiences within networks, which can derive conclusions from a complex and seemingly unrelated set of information. In the world of finance, neural networks assist in the development of processes such as time-series forecasting, algorithmic trading, securities classification, credit risk modelling and the construction of proprietary indicators and price derivatives.

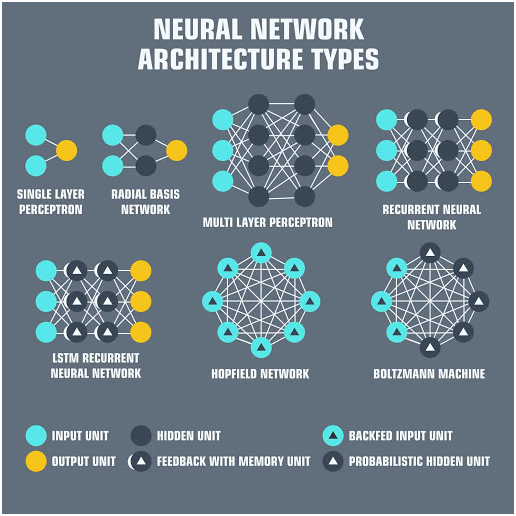

A neural network bears a strong resemblance to statistical methods such as curve fitting and regression analysis. A neural network contains layers of interconnected nodes. Each node is a perceptron and is similar to a multiple linear regression. The perceptron feeds the signal produced by a multiple linear regression into an activation function that may be nonlinear. In a multi-layered perceptron, perceptrons are arranged in interconnected layers. The input layer collects input patterns. The output layer has output signals to which input patterns may map. For example, the patterns may comprise a list of quantities for technical indicators about a security, while potential outputs could be “buy” or “sell.”
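As a rough sketch of the multi-layered perceptron described above, the following Python passes a list of indicator values through one hidden layer and one output node. All weights, biases and indicator values are hypothetical and hand-picked for illustration; a real network would learn them from data. The 0.5 cut-off between “buy” and “sell” is likewise an assumption.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Each row of `weights` is one perceptron: a multiple linear
    regression of the inputs followed by a nonlinear activation."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def mlp_forward(inputs, layers):
    """Pass the input pattern through each (weights, biases) layer in turn."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal

# Hypothetical parameters: 3 inputs -> 2 hidden nodes -> 1 output node.
hidden = ([[0.8, -0.4, 0.3], [-0.5, 0.9, 0.1]], [0.0, 0.1])
output = ([[1.2, -0.7]], [0.05])

indicators = [0.6, 0.2, 0.9]  # made-up technical indicator values
score = mlp_forward(indicators, [hidden, output])[0]
decision = "buy" if score >= 0.5 else "sell"
```

The input list plays the role of the input layer, and the single output score is mapped to one of the two potential outputs.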

Historical perspective

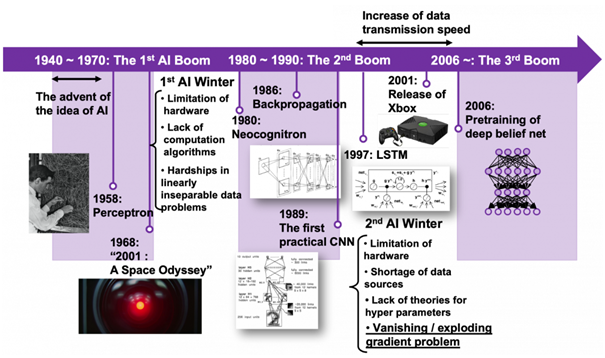

The theoretical base for contemporary neural networks was independently proposed by Alexander Bain (1873) and William James (1890). In their work, both thoughts and body activity resulted from interactions among neurons within the brain. C. S. Sherrington (1898) conducted experiments to test James's theory. McCulloch and Pitts (1943) created a computational model for neural networks based on mathematics and algorithms. Later, Donald Hebb created a hypothesis of learning based on the mechanism of neural plasticity that is now known as Hebbian learning. Farley and Clark (1954) first used computational machines, then called calculators, to simulate a Hebbian network.

Rosenblatt (1958) created the perceptron, an algorithm for pattern recognition based on a two-layer learning computer network using simple addition and subtraction. Neural network research stagnated after the publication of machine learning research by Marvin Minsky and Seymour Papert (1969). They described two key issues: single-layer neural networks were incapable of processing the exclusive-or circuit, and computers were not sophisticated enough to effectively handle the long run time required by large neural networks. The text by Rumelhart and McClelland (1986) provided a full exposition on the use of connectionism (i.e. parallel distributed processing) in computers to simulate neural processes.
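The exclusive-or limitation can be reproduced with a small experiment. The sketch below is a simplified perceptron learning rule written for illustration, not Rosenblatt's original formulation; the function name, the learning rate and the epoch limit are assumptions. A single threshold unit learns AND, which is linearly separable, but never finishes an epoch without errors on XOR.

```python
def train_perceptron(samples, epochs=100, lr=1.0):
    """Train a single threshold unit on two-input samples.
    Returns (weights, bias, converged)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            predicted = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - predicted
            if delta != 0:
                # Updates only add or subtract the (scaled) inputs.
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
        if errors == 0:
            return w, b, True  # every sample classified correctly
    return w, b, False

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

_, _, and_converged = train_perceptron(AND)
_, _, xor_converged = train_perceptron(XOR)
```

No straight line separates the XOR outputs, so raising the epoch limit does not help; at least one additional layer is needed.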

The development of metal-oxide-semiconductor (MOS) very-large-scale integration (VLSI), in the form of complementary MOS (CMOS) technology, provided more processing power for the development of practical artificial neural networks in the 1980s. In 1992, max-pooling was introduced to aid 3D object recognition. Geoffrey Hinton (2006) proposed learning a high-level representation using successive layers of binary or real-valued latent variables, with a restricted Boltzmann machine to model each layer. In 2012, Ng and Dean created a network that learned to recognize higher-level concepts only from watching unlabelled images. Unsupervised pre-training and increased computing power from graphics processing units (GPUs) and distributed computing allowed the use of larger networks, particularly in image and visual recognition problems, an approach that became known as "deep learning". Ciresan (2012) built the first pattern recognizers to achieve human-competitive or even superhuman performance on benchmarks such as traffic sign recognition.

Applications of Neural Networks

Neural networks can be used in many different fields. The tasks to which artificial neural networks are applied tend to fall within the following broad categories.

- The first category involves Function approximation, or regression analysis, and includes time series prediction and modelling.

- The second category deals with Classification, including pattern and sequence recognition, novelty detection and sequential decision making.

- Lastly, the third category consists of Data processing, inclusive of filtering, clustering, blind signal separation and compression.

Application areas of Neural Networks include nonlinear system identification and control (vehicle control, process control), game-playing and decision making (backgammon, chess, racing), pattern recognition (radar systems, face identification, object recognition), sequence recognition (gesture, speech, handwritten text recognition), medical diagnosis, financial applications, data mining (or knowledge discovery in databases), visualization and e-mail spam filtering. For example, it is possible to create a semantic profile of a user's interests from pictures, using a network trained for object recognition.

In the financial world, Neural Networks are broadly used for financial operations, enterprise planning, trading, business analytics and product maintenance. Neural networks have also gained widespread adoption in business applications such as forecasting and marketing research solutions, fraud detection and risk assessment. A neural network evaluates price data and unearths opportunities for making trade decisions based on the data analysis.

Neural Networks have been used to accelerate reliability analysis of infrastructures subject to natural disasters and to predict foundation settlements. Neural Networks have also been used for building black-box models in geoscience: hydrology, ocean modelling and coastal engineering, and geomorphology. Neural Networks have been employed in cyber security, with the objective of discriminating between legitimate activities and malicious ones. For example, machine learning has been used for classifying Android malware, for identifying domains belonging to threat actors and for detecting URLs posing a security risk. Research is underway on Neural Network systems designed for penetration testing and for detecting botnets, credit card fraud and network intrusions.

Neural Networks have been proposed as a tool to solve partial differential equations in physics and to simulate the properties of many-body open quantum systems. In brain research, Neural Networks have been used to study the short-term behaviour of individual neurons, the dynamics of neural circuitry arising from interactions between individual neurons, and how behaviour could arise from abstract neural modules that represent complete subsystems.

Criticism of Neural Networks

The most common criticism of neural networks in robotics is that they need a large diversity of training samples for real-world operation. However, this is not surprising, as any learning machine needs sufficient representative examples to capture the underlying structure that allows it to generalize to new cases.

Dean Pomerleau has used a neural network to train a robotic vehicle to drive on multiple types of roads (single lane, multiple lane, dirt, etc.). Most of his research was devoted to extrapolating multiple training scenarios from a single training experience, and to preserving past training diversity so that the system does not become overtrained. For example, if it is presented with a series of right turns, it should not learn to always turn right. These issues are common in neural networks that must decide from amongst a wide variety of responses, but they can be dealt with in several ways, for example by randomly shuffling the training samples, by using a numerical optimization algorithm that does not take too large steps when changing the network connections following an example, or by grouping examples in so-called mini-batches.
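The three remedies (shuffling, small steps and mini-batches) can be combined in one training loop. The sketch below is an assumption-laden illustration: for brevity it fits a simple linear model `y = w*x + b` rather than a neural network, and the function name, learning rate, batch size and synthetic data are all hypothetical.

```python
import random

def minibatch_sgd(samples, lr=0.1, batch_size=8, epochs=200, seed=0):
    """Fit y = w*x + b by mini-batch gradient descent on squared error."""
    rng = random.Random(seed)
    data = list(samples)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # break up long runs of similar examples
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Average the gradient over the mini-batch.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            # A small learning rate keeps each step modest.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Deliberately ordered data (all negative x first, then positive),
# analogous to a long series of right turns.
samples = [(x / 20.0, 2.0 * (x / 20.0) + 1.0) for x in range(-20, 21)]
w, b = minibatch_sgd(samples)
```

Because each update averages over a shuffled batch and moves only a small distance, no single run of similar examples dominates what the model learns.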

A. K. Dewdney stated in 1997 that although neural networks do solve a few problems, their powers of computation are so limited that he was surprised that anyone took them seriously as a general problem-solving tool. Arguments for Dewdney's position are that substantial processing and storage resources must be committed to implement large and effective software neural networks. Arguments against Dewdney's position are that neural networks have been successfully used to solve multiple complex and diverse tasks, such as autonomously flying aircraft.

Although the brain has hardware tailored to the task of processing signals, simulating even a highly simplified form of it on conventional computer hardware could compel a neural network designer to fill many millions of database rows for its connections, which could, consequently, consume vast amounts of computer memory and hard disk space. Further, the designers of neural network systems will often have to simulate the transmission of signals through many of these connections and neurons, which often demands enormous amounts of CPU processing power and time. While neural networks yield effective programs, they do so at the cost of efficiency and tend to consume considerable amounts of time and money.
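A back-of-the-envelope calculation shows how quickly the number of connections grows. The sketch below assumes a fully connected feedforward network with one bias per node and 4 bytes per stored value (a 32-bit float); the layer sizes are hypothetical and the function name is illustrative.

```python
def count_parameters(layer_sizes):
    """Number of weights plus biases in a fully connected feedforward network."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

layer_sizes = [784, 1000, 1000, 10]  # hypothetical: input, two hidden layers, output
params = count_parameters(layer_sizes)
memory_mb = params * 4 / 1e6  # assuming 4 bytes per parameter
```

Even this modest network has close to 1.8 million parameters, each of which must be stored and then touched on every forward pass.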

Although analysing what has been learned by an artificial neural network is difficult, it is much easier than analysing what has been learned by a biological neural network. Other criticism has come from proponents of hybrid models that combine neural networks and symbolic approaches. They advocate the intermixing of these two approaches and believe that hybrid models can better capture the mechanisms of the human mind.

Recent improvements and the Way forward

While research was initially concerned mostly with the electrical characteristics of neurons, a particularly important part of the investigation in recent years has been the exploration of the role of neuromodulators such as dopamine, acetylcholine, and serotonin in behaviour and learning.

Biophysical models have been important in understanding mechanisms for synaptic plasticity, and have had applications in both computer science and neuroscience. Research is ongoing into the computational algorithms used in the brain, including radial basis networks and neural backpropagation as possible mechanisms for processing data.

Computational devices have been created in complementary metal-oxide-semiconductor (CMOS) technology for both biophysical simulation and neuromorphic computing. Efforts have been made to create nanodevices for very-large-scale principal components analyses and convolution. If successful, these could usher in a new era of neural computing that is a step beyond digital computing, because it depends on learning rather than programming and is fundamentally analog rather than digital.

Recurrent neural networks and deep feedforward neural networks, such as multi-dimensional long short-term memory (LSTM), were developed between 2009 and 2012. Variants of the backpropagation algorithm, as well as unsupervised methods, can be used to train deep, highly nonlinear neural architectures. Radial basis function and wavelet networks have also been introduced. These can offer best approximation properties and have been applied in nonlinear system identification and classification applications.

Deep learning feedforward networks alternate convolutional layers and max-pooling layers, topped by several pure classification layers. Fast GPU-based implementations of this approach exist. Such neural networks were the first artificial pattern recognizers to achieve human-competitive or even superhuman performance on benchmarks such as traffic sign recognition or the handwritten digits problem.
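To make the alternation of convolutional and max-pooling layers concrete, here is a minimal pure-Python sketch of one convolution followed by one pooling step. The function names, the tiny 5×5 image and the edge-detecting kernel are assumptions made for illustration; practical systems use optimized GPU libraries and learn their kernels from data.

```python
def conv2d_valid(image, kernel):
    """2-D 'valid' cross-correlation, the operation usually called
    convolution in deep learning (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max-pooling: keep the largest value
    in each size x size window."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [[max(feature_map[r * size + dr][c * size + dc]
                 for dr in range(size) for dc in range(size))
             for c in range(cols)]
            for r in range(rows)]

# Hypothetical image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
kernel = [[-1, 1], [-1, 1]]  # responds to a dark-to-bright vertical edge

features = conv2d_valid(image, kernel)  # 4 x 4 feature map
pooled = max_pool2d(features)           # 2 x 2 after pooling
```

The convolution responds only where the edge sits, and pooling shrinks the feature map while preserving that response, which is what the later classification layers consume.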

Concluding remarks

The networks’ opacity is still unsettling to theorists, but there’s headway on that front, too. Research has addressed the range of computations that deep-learning networks can execute and the advantages that deeper networks can offer. Research has also examined the problem of global optimization, that is, guaranteeing that a network has found the best settings in accordance with its training data. Additionally, research continues to look into overfitting, where the network becomes so attuned to the specifics of its training data that it fails to generalize to other instances of the same categories.

There are still plenty of theoretical questions to be answered, but the work of researchers could help ensure that neural networks finally break the generational cycle that has brought them in and out of favour for several decades. Neural networks are at the forefront of cognitive computing, which could perform some of the more advanced human mental functions. Deep learning systems are based on multilayer neural networks and power, for example, the speech recognition capability of Siri, the mobile assistant on Apple phones. Combined with exponentially growing computing power and the massive aggregates of big data, deep-learning neural networks could influence the distribution of work between people and machines. Artificial neural networks and the more complex deep learning techniques are some of the most capable artificial intelligence tools for solving very complex problems, and they will continue to be developed and leveraged in the future. The progression of artificial intelligence techniques and applications will be exciting to watch.